This guide covers the complete process of installing and configuring a Kubernetes cluster in an enterprise environment, with particular emphasis on security and best practices.

1. Architecture and prerequisites

Target architecture

- 1 master node

- 2 worker nodes

- Overlay network: Calico

- OS: Debian 12 (Bookworm)

- Environment: VirtualBox

Recommended minimum hardware configuration

Master node

- CPU: 2 vCPUs minimum

- RAM: 4 GB minimum

- Disk: 50 GB minimum

Worker nodes

- CPU: 4 vCPUs minimum

- RAM: 8 GB minimum

- Disk: 100 GB minimum
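The CPU and RAM minimums above can be checked on each VM before going further. The following is a minimal sketch, not part of the original procedure: the function name `check_minimums` and the 10% RAM tolerance are assumptions (a VM configured with 4 GB usually reports slightly less memory to the operating system).

```shell
# check_minimums ROLE CPUS RAM_MIB
# Hypothetical helper: compares a node's resources with the minimums listed above.
# Prints one line per resource and returns 0 only if both checks pass.
check_minimums() {
  local role="$1" cpus="$2" ram_mib="$3"
  local min_cpus min_ram_mib ram_floor status=0
  case "$role" in
    master) min_cpus=2; min_ram_mib=4096 ;;
    worker) min_cpus=4; min_ram_mib=8192 ;;
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  # Assumption: accept up to 10% less RAM than nominal, since the kernel reserves some memory.
  ram_floor=$(( min_ram_mib * 90 / 100 ))
  if [ "$cpus" -ge "$min_cpus" ]; then
    echo "CPU ok: $cpus vCPU(s), minimum $min_cpus"
  else
    echo "CPU too low: $cpus vCPU(s), minimum $min_cpus"
    status=1
  fi
  if [ "$ram_mib" -ge "$ram_floor" ]; then
    echo "RAM ok: $ram_mib MiB, minimum about $min_ram_mib MiB"
  else
    echo "RAM too low: $ram_mib MiB, minimum about $min_ram_mib MiB"
    status=1
  fi
  return "$status"
}

# Example on a live worker node (reads the real values):
#   check_minimums worker "$(nproc)" "$(awk '/^MemTotal:/ {print int($2/1024)}' /proc/meminfo)"
```

Disk size is left out on purpose, because the relevant mount point depends on how the VM was partitioned.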

Initial system configuration (on all nodes)

# Initial system update

sudo apt update && sudo apt upgrade -y

sudo apt install -y apt-transport-https ca-certificates curl gnupg lsb-release systemd-timesyncd

# Enable the NTP service

sudo systemctl enable systemd-timesyncd

sudo systemctl start systemd-timesyncd

# Disable swap (required by Kubernetes)

sudo swapoff -a

sudo sed -i '/ swap / s/^/#/' /etc/fstab

# Load the required kernel modules

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Network settings for Kubernetes

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

sudo sysctl --system
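Before installing anything else, it can help to confirm that these settings actually took effect. The sketch below is one way to do it, under stated assumptions: the function names are hypothetical, and the module check reads `/proc/modules`, which does not list modules compiled directly into the kernel.

```shell
# swap_active_count [SWAPS_FILE]
# Prints the number of active swap areas (data lines after the header of /proc/swaps).
swap_active_count() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# missing_modules MODULES_FILE MODULE...
# Prints each requested module that is not listed in MODULES_FILE (normally /proc/modules).
missing_modules() {
  local modules_file="$1" m
  shift
  for m in "$@"; do
    grep -q "^${m} " "$modules_file" || echo "$m"
  done
}

# Example on a live node:
#   swap_active_count                                    # should print 0
#   missing_modules /proc/modules overlay br_netfilter   # should print nothing
#   sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
```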

2. Installing the base components

On all nodes, install containerd, then the Kubernetes packages.

sudo apt install -y containerd

# Generate the default configuration file

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

# Use the systemd cgroup driver

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

# Add the public signing key and the official Kubernetes repository

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.31/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install the Kubernetes packages

sudo apt update

sudo apt install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl
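The `sed` substitution in the containerd step changes nothing if the generated `config.toml` does not contain the exact text `SystemdCgroup = false`, and it reports no error in that case. A minimal sketch for confirming the result follows; the function name is hypothetical and the default path is the one used above.

```shell
# systemd_cgroup_enabled [CONFIG_FILE]
# Returns 0 if the containerd configuration sets SystemdCgroup = true, non-zero otherwise.
systemd_cgroup_enabled() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' \
    "${1:-/etc/containerd/config.toml}"
}

# Example on a node:
#   systemd_cgroup_enabled && echo "systemd cgroup driver enabled"
#   apt-mark showhold    # should list kubelet, kubeadm and kubectl
```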

3. Initializing the cluster

Initialize the cluster on the master node, specifying the Calico pod network and the control-plane endpoint. Replace MASTER_IP with the IP address of the master node.

sudo kubeadm init --pod-network-cidr=192.168.0.0/16 --control-plane-endpoint="MASTER_IP:6443" --upload-certs

# Configuration for the non-root user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Install Calico

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml

On each worker node, run the kubeadm join command that was printed when the master was initialized.

sudo kubeadm join MASTER_IP:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>
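If the join command printed by `kubeadm init` is no longer available, `kubeadm token create --print-join-command` on the master prints a fresh one. The sketch below shows how the two placeholders could be sanity-checked by hand; the function names are hypothetical, and the hash pipeline assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# valid_bootstrap_token TOKEN
# Returns 0 if TOKEN has the kubeadm bootstrap token format: 6 characters, a dot,
# then 16 characters, all lowercase letters or digits.
valid_bootstrap_token() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# ca_cert_hash [CA_CERT]
# Prints the SHA-256 hash of the CA public key, i.e. the value expected after "sha256:"
# in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Example on the master node:
#   valid_bootstrap_token "<token>" || echo "token format looks wrong"
#   sudo sh -c "$(declare -f ca_cert_hash 2>/dev/null); ca_cert_hash"   # bash only
#   # or simply run the function in a root shell
```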

4. Configuration post‑installation

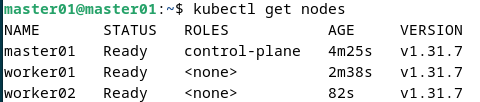

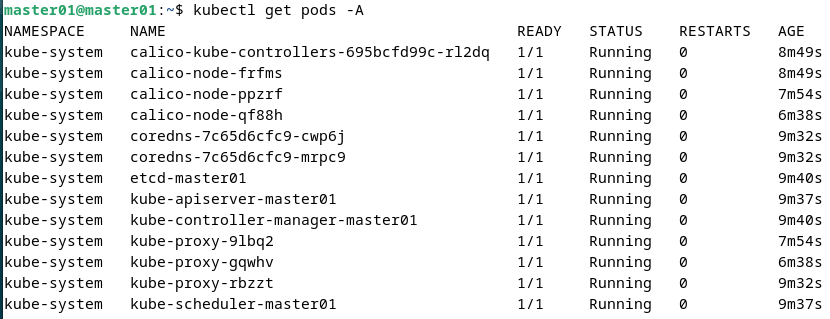

Sur le nœud maître, vérifiez que le cluster fonctionne correctement.

kubectl get nodes

kubectl get pods -A

Pour mettre en place un stockage local, déployez le local-path-provisioner

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
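One way to confirm that the default StorageClass is picked up is to create a claim that names no StorageClass at all. The following is a sketch only: `render_test_pvc` and the claim name are hypothetical. With local-path-provisioner the claim is expected to stay Pending until a pod mounts it, because the StorageClass in that manifest uses WaitForFirstConsumer binding.

```shell
# render_test_pvc NAME SIZE
# Hypothetical helper: prints a PersistentVolumeClaim manifest that deliberately omits
# the storage class field, so the cluster's default StorageClass is used.
render_test_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: $2
EOF
}

# Example against a live cluster:
#   kubectl get storageclass                          # local-path should be marked "(default)"
#   render_test_pvc demo-claim 1Gi | kubectl apply -f -
#   kubectl get pvc demo-claim
```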

5. Securing the cluster

On the master node, create a limited-access user by generating and signing a certificate.

# Generate the private key and the certificate signing request (CSR)

openssl genrsa -out mbogning.key 2048

openssl req -new -key mbogning.key -out mbogning.csr -subj "/CN=mbogning/O=team1"

# Sign the certificate with the cluster CA

sudo openssl x509 -req -in mbogning.csr \
  -CA /etc/kubernetes/pki/ca.crt \
  -CAkey /etc/kubernetes/pki/ca.key \
  -CAcreateserial \
  -out mbogning.crt -days 365

# Create a dedicated namespace (e.g. production) and define restricted roles

kubectl create namespace production

# Create a role that limits the user to read-only actions on pods

kubectl create role pod-reader --verb=get,list,watch --resource=pods --namespace=production

kubectl create rolebinding pod-reader-binding --role=pod-reader --user=mbogning --namespace=production

# Create a kubeconfig context for the user

kubectl config set-credentials mbogning \
  --client-certificate=mbogning.crt \
  --client-key=mbogning.key

kubectl config set-context mbogning-context \
  --cluster=kubernetes \
  --namespace=production \
  --user=mbogning

# Set up network policies

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: production
spec:
  podSelector: {}
  policyTypes:
  - Ingress
EOF
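The RBAC rules can be verified without switching kubeconfig contexts, since `kubectl auth can-i` accepts `--as` to impersonate a user. The sketch below wraps that call; `check_access` is a hypothetical helper name, and it assumes the commands are run with the cluster admin kubeconfig.

```shell
# check_access USER NAMESPACE VERB RESOURCE EXPECTED
# Asks the API server whether USER may perform VERB on RESOURCE in NAMESPACE, using
# impersonation (--as), and compares the answer ("yes" or "no") with EXPECTED.
check_access() {
  local user="$1" ns="$2" verb="$3" resource="$4" expected="$5" answer
  # kubectl exits non-zero when the answer is "no", so the exit status is ignored here.
  answer=$(kubectl auth can-i "$verb" "$resource" --namespace "$ns" --as "$user" 2>/dev/null) || true
  if [ "$answer" = "$expected" ]; then
    echo "ok: $user $verb $resource in $ns -> $answer"
  else
    echo "unexpected: $user $verb $resource in $ns -> ${answer:-no answer} (expected $expected)"
    return 1
  fi
}

# Example against a live cluster:
#   check_access mbogning production list pods yes
#   check_access mbogning production delete pods no
#   check_access mbogning default list pods no
```

The last call checks that the role does not leak outside the production namespace, which is the point of using a Role and RoleBinding rather than their cluster-wide equivalents.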

6. Monitoring and logging

On the master node, install Helm, which simplifies installing and managing applications on Kubernetes.

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

helm version

Setting up monitoring with Prometheus and Grafana

Add the dedicated Helm repository and deploy the monitoring stack in the monitoring namespace.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

kubectl create namespace monitoring

helm install prometheus prometheus-community/kube-prometheus-stack \
  --namespace monitoring \
  --set prometheusOperator.createCustomResource=true \
  --set grafana.enabled=true

Setting up logging with Loki

For a lightweight logging solution integrated with Grafana, install Loki and Promtail.

helm repo add grafana https://grafana.github.io/helm-charts

helm repo update

kubectl create namespace logging

helm install loki grafana/loki-stack \
  --namespace logging \
  --set promtail.enabled=true \
  --set loki.persistence.enabled=true \
  --set loki.persistence.size=10Gi \
  --set grafana.enabled=false

Configuring Grafana to use Loki as a data source

Create a ConfigMap to register Loki with Grafana.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-datasource
  namespace: monitoring
  labels:
    grafana_datasource: "1"
data:
  loki-datasource.yaml: |-
    apiVersion: 1
    datasources:
    - name: Loki
      type: loki
      url: http://loki.logging.svc.cluster.local:3100
      access: proxy
      isDefault: false
EOF

Restart the Grafana deployment to apply the changes.

kubectl rollout restart deployment -n monitoring prometheus-grafana

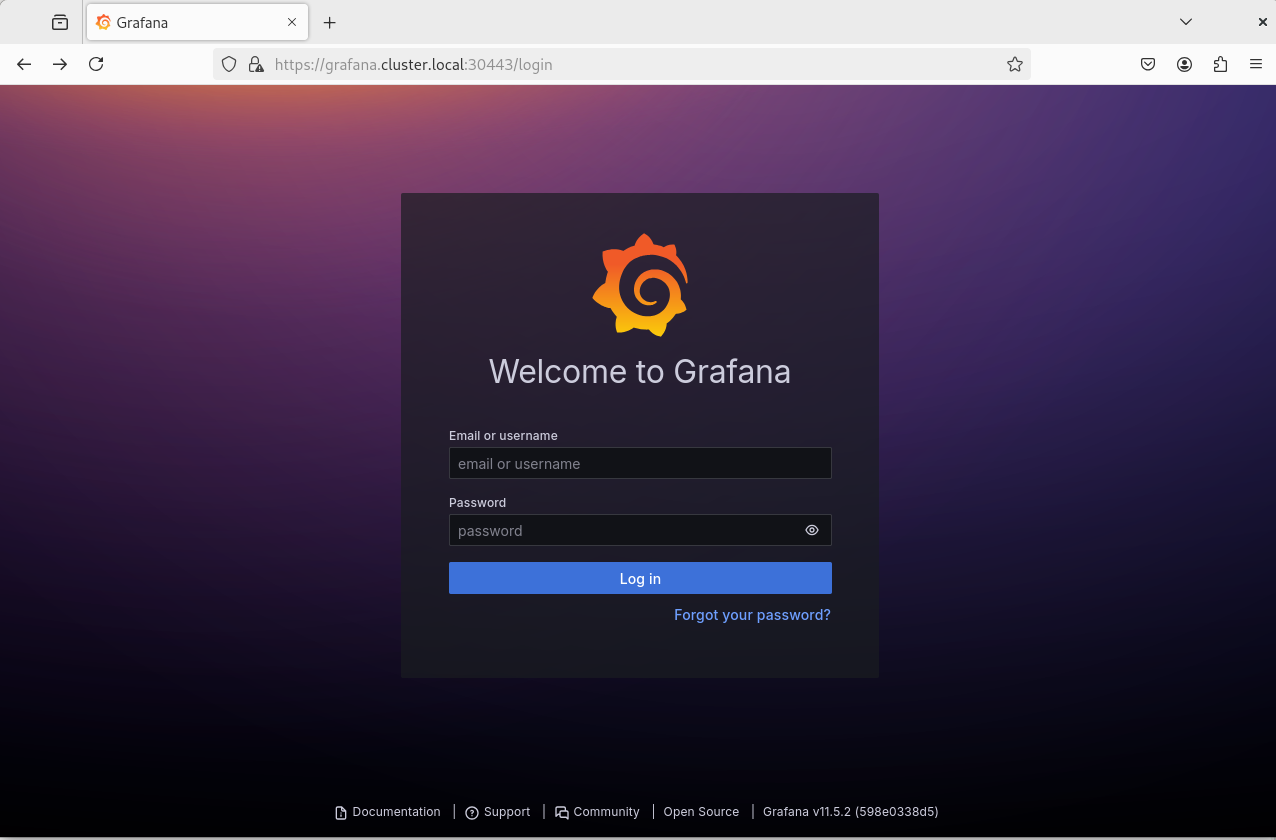

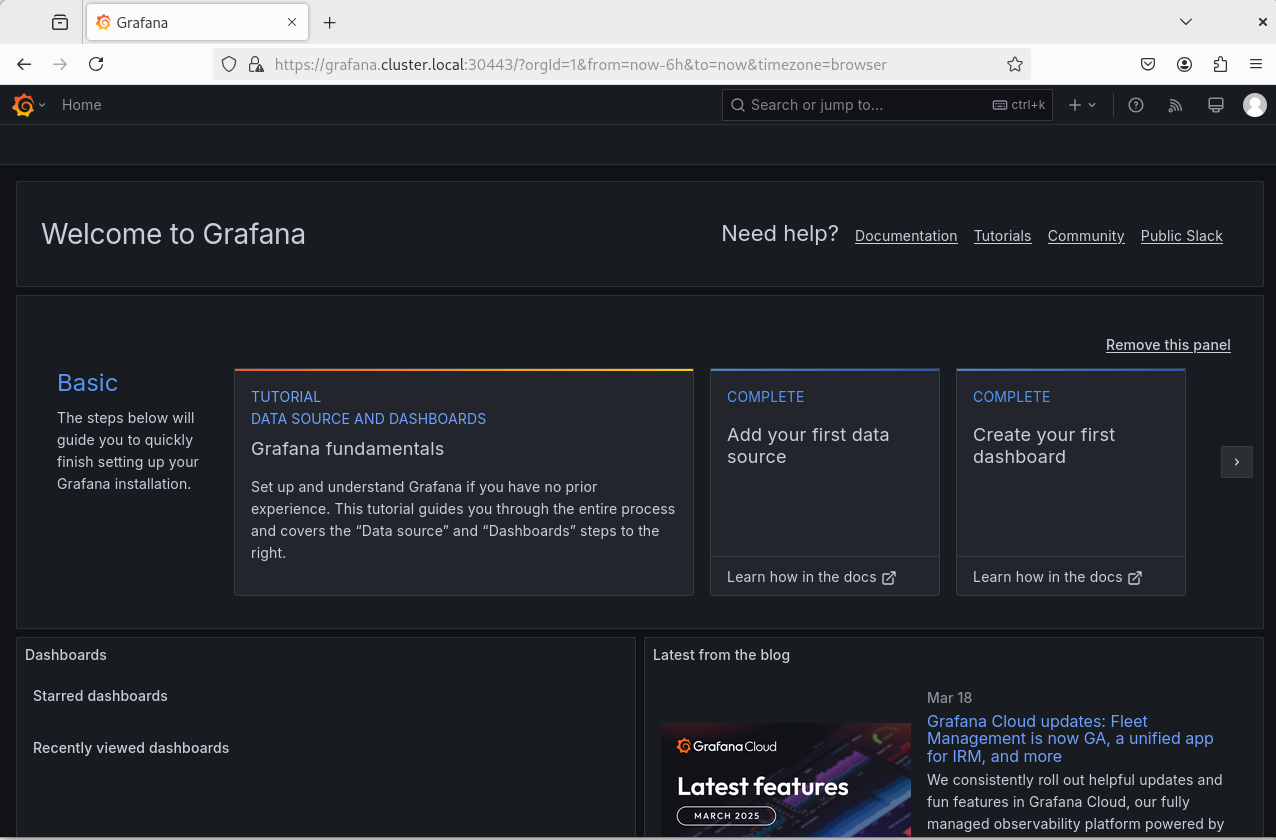

Accessing the monitoring interfaces

Temporary method (port-forward)

For quick access, use the following commands.

# Access to Grafana

kubectl port-forward -n monitoring svc/prometheus-grafana 3000:80

# Access to Prometheus

kubectl port-forward -n monitoring svc/prometheus-kube-prometheus-prometheus 9090:9090

Then open:

- Grafana: http://localhost:3000 (default credentials: admin/prom-operator)

- Prometheus: http://localhost:9090
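Port-forwards take a moment to become usable, so a script that opens them may want to wait before querying the services. The sketch below polls a URL with curl; `wait_for_http` is a hypothetical helper, and the example paths are the health endpoints exposed by Grafana (`/api/health`) and Prometheus (`/-/ready`).

```shell
# wait_for_http URL [TRIES]
# Polls URL once per second until it answers with an HTTP success status, up to TRIES
# attempts (default 30). Returns 0 on success, 1 if the endpoint never answered.
wait_for_http() {
  local url="$1" tries="${2:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    if curl -fsS -o /dev/null --max-time 2 "$url" 2>/dev/null; then
      return 0
    fi
    i=$(( i + 1 ))
    sleep 1
  done
  return 1
}

# Example, with the two port-forward commands running in other terminals:
#   wait_for_http http://localhost:3000/api/health && echo "Grafana is up"
#   wait_for_http http://localhost:9090/-/ready && echo "Prometheus is up"
```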

Permanent access via Ingress and HTTPS

For a production solution, deploy the NGINX Ingress controller and cert-manager to manage TLS certificates.

1. Installing the NGINX Ingress Controller

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

helm install nginx-ingress ingress-nginx/ingress-nginx \
  --namespace ingress-nginx \
  --create-namespace \
  --set controller.service.type=NodePort \
  --set controller.service.nodePorts.http=30080 \
  --set controller.service.nodePorts.https=30443

2. Installing cert-manager

helm repo add jetstack https://charts.jetstack.io

helm repo update

helm install cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --set installCRDs=true

3. Creating a Let’s Encrypt ClusterIssuer

cat <<EOF | kubectl apply -f -
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: admin@votredomaine.com
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
    - http01:
        ingress:
          class: nginx
EOF

4. Deployment of Ingress Rules for Grafana and Prometheus

- Ingress for Grafana

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
  - hosts:
    - grafana.cluster.local
    secretName: grafana-tls
  rules:
  - host: grafana.cluster.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-grafana
            port:
              number: 80
EOF

- Ingress for Prometheus

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
  - hosts:
    - prometheus.cluster.local
    secretName: prometheus-tls
  rules:
  - host: prometheus.cluster.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-kube-prometheus-prometheus
            port:
              number: 9090
EOF

DNS configuration

Modify the /etc/hosts file on the machines that will access the cluster.

Copy : 192.168.X.X grafana.cluster.local prometheus.cluster.local

Replace 192.168.X.X with the IP address of the node where the Ingress controller is exposed.

Access Grafana at :

https://grafana.cluster.local:30443

Log in using the default logins (admin/prom-operator)

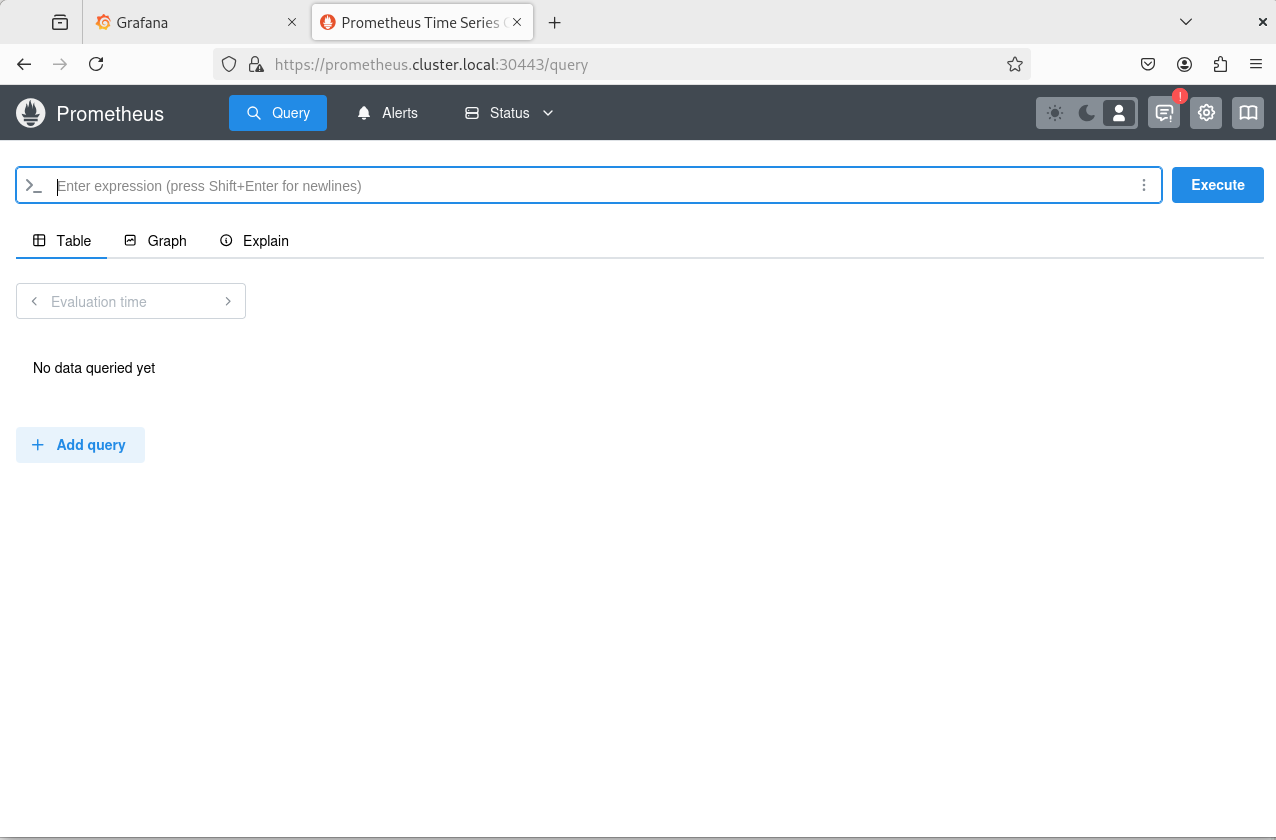

Access Prometheus at :

https://prometheus.cluster.local:30443
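The Ingress rules can also be tested from the command line before touching /etc/hosts, because curl's `--resolve` option maps a host name and port to an IP address for a single request. The sketch below is illustrative: `probe_ingress` is a hypothetical helper, and `-k` is used on the assumption that the served certificate may not be trusted by the client in a lab setup.

```shell
# probe_ingress HOST NODE_IP [PORT] [URL_PATH]
# Sends an HTTPS request for HOST to NODE_IP:PORT without relying on /etc/hosts or DNS,
# and prints the HTTP status code returned through the Ingress controller.
probe_ingress() {
  local host="$1" node_ip="$2" port="${3:-30443}" url_path="${4:-/}"
  curl -ksS -o /dev/null -w '%{http_code}\n' --max-time 5 \
    --resolve "${host}:${port}:${node_ip}" \
    "https://${host}:${port}${url_path}"
}

# Example (replace 192.168.X.X with the address of the node exposing the controller):
#   probe_ingress grafana.cluster.local 192.168.X.X 30443 /api/health
#   probe_ingress prometheus.cluster.local 192.168.X.X 30443 /-/ready
```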

7. Maintenance and backup

On the master node, install the etcd-client tool and take regular snapshots of the cluster's etcd data.

sudo apt install -y etcd-client

sudo mkdir -p /backup

sudo ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key \
  snapshot save /backup/etcd-snapshot-$(date +%Y%m%d).db
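The command above takes a single snapshot. To make backups regular, it would typically be scheduled (with cron or a systemd timer, for example) and paired with some form of rotation so that /backup does not fill up. The rotation part could look like the sketch below; `prune_snapshots` and the retention count of 7 are assumptions, not part of the original procedure.

```shell
# prune_snapshots DIR KEEP
# Deletes the oldest etcd-snapshot-YYYYMMDD.db files in DIR so that at most KEEP remain.
# Relies on the date-stamped file names sorting in chronological order.
prune_snapshots() {
  local dir="$1" keep="$2" total excess
  total=$(find "$dir" -maxdepth 1 -type f -name 'etcd-snapshot-*.db' | wc -l)
  excess=$(( total - keep ))
  [ "$excess" -gt 0 ] || return 0
  find "$dir" -maxdepth 1 -type f -name 'etcd-snapshot-*.db' | sort | head -n "$excess" |
    while IFS= read -r snapshot; do
      rm -- "$snapshot"
    done
}

# Example, run as root after the "snapshot save" command (keeps the 7 most recent files):
#   prune_snapshots /backup 7
```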

8. Validation and testing

Run the following commands to check health and configuration.

kubectl get nodes

kubectl get pods -A

kubectl get componentstatuses

Verifying the monitoring and logging services

kubectl get pods -n monitoring

kubectl get pods -n logging
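Reading these listings by eye works for a three-node cluster, but the same checks can be filtered so that only problems are shown. The sketch below assumes the default column layout of `kubectl get ... --no-headers`; both function names are hypothetical. Note also that `componentstatuses` has been deprecated since Kubernetes 1.19, so its output should be treated as indicative only.

```shell
# not_ready_nodes
# Reads `kubectl get nodes --no-headers` output on stdin and prints the name of every
# node whose STATUS column is not exactly "Ready" (cordoned nodes are reported too).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# not_running_pods
# Reads `kubectl get pods -A --no-headers` output on stdin and prints "namespace/name"
# for every pod whose STATUS is neither Running nor Completed.
not_running_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 }'
}

# Example against a live cluster (no output means nothing to report):
#   kubectl get nodes --no-headers | not_ready_nodes
#   kubectl get pods -A --no-headers | not_running_pods
```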

Documentation

- Official Kubernetes documentation

- Calico documentation

- Grafana and Prometheus documentation

- CIS Kubernetes Benchmark guide

Conclusion

This comprehensive guide has enabled you to:

- Deploy a robust Kubernetes cluster on Debian 12 with a master/worker architecture suited to enterprise environments.

- Ensure inter-node communication thanks to a careful VirtualBox configuration and the use of Calico.

- Secure the cluster by setting up restricted access via certificates, RBAC and network policies.

- Implement a monitoring and logging solution integrated with Prometheus, Grafana and Loki, as well as secure access to interfaces via Ingress and HTTPS.

- Plan maintenance with regular etcd backups.